To them, it didn’t make sense. He was fluent in English and Spanish, extremely friendly and an expert in systems engineering. Why hadn’t he been able to hold down a call center job?

His accent, the friend said, made it hard for many customers to understand him; some even hurled insults because of the way he spoke.

The three students realized the problem was even bigger than their friend’s experience. So they founded a startup to solve it.

Now their company, Sanas, is testing out artificial intelligence-powered software that aims to eliminate miscommunication by changing people’s accents in real time. A call center worker in the Philippines, for example, could speak normally into the microphone and end up sounding more like someone from Kansas to a customer on the other end.

Call centers, the startup’s founders say, are only the beginning. The company’s website touts its plans as “Speech, Reimagined.”

Eventually, they hope the app they’re developing will be used by a variety of industries and individuals. It could help doctors better understand patients, they say, or help grandchildren better understand their grandparents.

“We have a very grand vision for Sanas,” CEO Maxim Serebryakov says.

And for Serebryakov and his co-founders, the project is personal.

People’s ‘voices aren’t being heard as much as their accents’

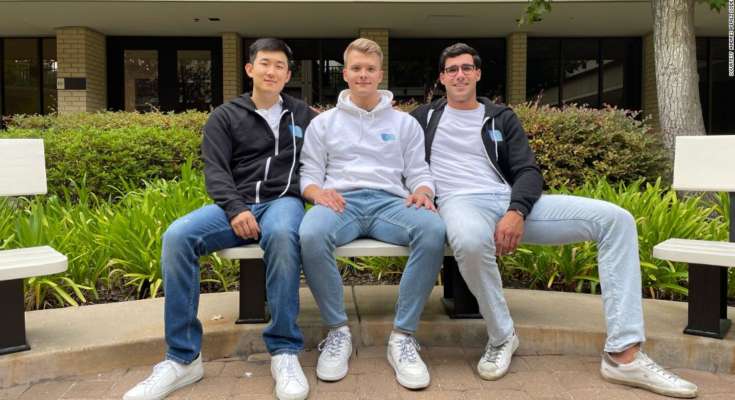

The trio who founded Sanas met at Stanford University, but they’re all from different countries originally — Serebryakov, now the CEO, is from Russia; Andrés Pérez Soderi, now the chief financial officer, is from Venezuela; and Shawn Zhang, now the chief technology officer, is from China.

They’re no longer Stanford students. Serebryakov and Pérez have graduated; Zhang dropped out to focus on bringing Sanas to life.

They launched the company last year and gave it a name that can be easily pronounced in various languages “to highlight our global mission and wish to bring people closer together,” Pérez says.

Over the years, all three say they’ve experienced how accents can get in the way.

“We all come from international backgrounds. We’ve seen firsthand how people treat you differently just because of the way you speak,” Serebryakov says. “It’s heartbreaking sometimes.”

Zhang says his mother, who came to the United States more than 20 years ago from China, still has him speak to the cashier when they go grocery shopping together because she’s embarrassed.

“That’s one reason I joined Max and Andrés in building this company, trying to help out those people who think their voices aren’t being heard as much as their accents,” he says.

Serebryakov says he’s seen how his parents are treated at hotels when they come to visit him in the United States — how people make assumptions when they hear their accents.

“They speak a little louder. They change their behavior,” he says.

Pérez says that after attending a British school, he struggled at first to understand American accents when he arrived in the United States.

And don’t get him started about what happens when his dad tries to use the Amazon Alexa his family gave him for Christmas.

“We quickly found out, when Alexa was turning the lights on in random places in the house and making them pink, that Alexa does not understand my dad’s accent at all,” Pérez says.

Call centers are testing the technology

Accent-related misunderstandings like these have created a growing market for apps that help users practice their English pronunciation. But Sanas is using AI to take a different approach.

The premise: that rather than learning to pronounce words differently, technology could do that for you. There’d no longer be a need for costly or time-consuming accent reduction training. And understanding would be nearly instantaneous.

Serebryakov says he knows people’s accents and identities can be closely linked, and he stresses that the company isn’t trying to erase accents, or imply that one way of speaking is better than another.

“We allow people to not have to change the way they speak to hold a position, to hold a job. Identity and accents is critical. They’re intertwined,” he says. “You never want someone to change their accent just to satisfy someone.”

Currently, Sanas’ algorithm can convert English to and from American, Australian, British, Filipino, Indian and Spanish accents, and the team is planning to add more. The team can add a new accent to the system by training a neural network on audio recordings from professional actors and other data, a process that takes several weeks.

The Sanas team played two demonstrations for CNN. In one, a man with an Indian accent is heard reading a series of literary sentences. Then those same phrases are converted into an American accent.

Another example features phrases that might be more common in a call center setting, like “if you give me your full name and order number, we can go ahead and start making the correction for you.”

The American-accented results sound somewhat artificial and stilted, like virtual-assistant voices such as Siri and Alexa, but Pérez says the team is working on improving the technology.

“The accent changes, but intonation is maintained,” he says. “We’re continuing to work on how to make the outcome as natural and as emotive and exciting as possible.”

Early feedback from call centers that have been trying out the technology has been positive, Pérez says. So have comments submitted on the company’s website as word spreads about the venture.

How the startup’s founders see its future

The positive response has allowed Sanas to grow its staff. Most of the Palo Alto, California-based company’s employees come from international backgrounds. And that’s no coincidence, Serebryakov says.

“What we’re building has resonated with so many people, even the people that we hire. … It’s just really exciting to see,” he says.

While the company is growing, it could still be a while before Sanas appears in an app store or shows up on a cell phone near you.

The team says they’re working with larger call center outsourcing companies for now and opting for a slower rollout to individual users so they can refine the technology and ensure security.

But eventually, they hope Sanas will be used by anyone who needs it — in other fields as well.

Pérez envisions it playing an important role helping people communicate with their doctors.

“Any second that is lost either in misunderstanding because of the lost time or the wrong messaging is potentially very, very impactful,” he says. “We really want to make sure that there’s nothing lost in translation.”

Someday, he says, it could also help people learning languages, improve dubbing in movies and help smart speakers in homes and voice assistants in cars understand different accents.

And not just in English — the Sanas team is also hoping to add other languages to the algorithm.

The three co-founders are still working on the details. But how this technology could make communication better in the future, they say, is one thing that’s easy to understand.