Sony’s holding its Technology Day event to show off what it’s been working on in its R&D labs, and this year, we got some great visuals of that tech. Amid the rehashes of the PS5’s haptics and 3D audio and a demo reel of Sony’s admittedly awesome displays for making virtual movie sets, we got to see a robot hand that Sony said could figure out grip strength depending on what it was picking up, a slightly dystopian-sounding “global sensing system,” and more.

Perhaps the most interesting thing Sony showed off was a headset that featured OLED displays with “4K-per-inch” resolution. While the headset Sony used in its presentation was very clearly something intended for lab and prototype use, the specs Sony laid out for the panels were reminiscent of the rumors swirling around the PlayStation VR 2.


They don’t exactly line up, though; Sony said the headset it showed off was 8K, given the 4K display per eye, and the PS VR 2 will supposedly only be 4K overall with 2000 x 2040 pixels per eye. Still, it’s exciting that Sony is working on VR-focused panels, along with latency reduction tech for them. In a Q&A session for journalists, Sony wouldn’t answer questions about when the displays would show up in an actual product but said that various divisions were already looking into how they could integrate them into products.

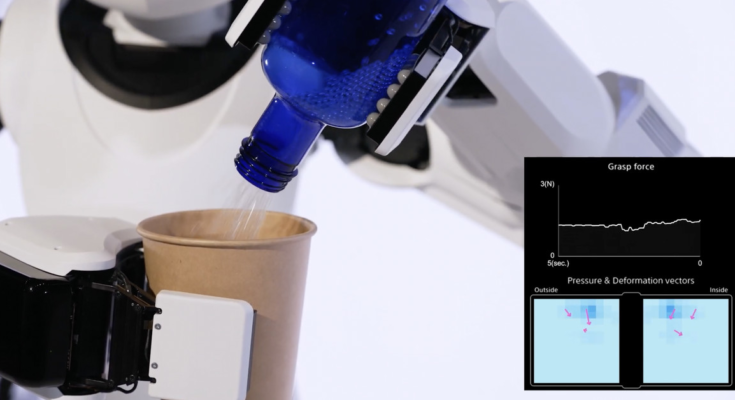

Sony also showed off a robotic grabber, a mechanized pincer that could be used to let a machine pick up objects. While that’s nothing new, Sony did say that its version had the ability to precisely control grip strength depending on what it was holding, letting it hold things tightly enough that they don’t slip (and adjusting if they do start to fall) without crushing delicate items like a vegetable or flower.

Sony says the grabber could be used to cook or line up items in a shop window, though to get that level of functionality, it would have to be paired with a way to move around and with AI that lets it determine which objects it needs to pick up. It’s hard to imagine we’ll see that sort of thing in public any time soon, but it does make for an impressive demo. It’s also nice that Sony made it look very robotic rather than like a hand; I’ve had my fill of eerily human-looking robot parts for this month.

Sony’s presentation also had some neat visuals to go with other projects, as well as another look at some devices we’ve seen before. It showed off some machine-learning supersampling tech (similar to Nvidia’s DLSS system), which it said it could use to improve resolution and performance for ray-traced rendering. A GIF of Sony’s comparison shots would ruin the quality, but you can check it out in the video below.

Finally, Sony talked about its “Mimamori” system, which it said is designed to watch over the planet. When I was watching the presentation in real time, I started to get a little worried when I saw this slide:

[Image: screenshot of a slide from Sony’s Mimamori presentation]

Sony went on to explain, though, that its idea was to use satellites to gather data from sensors placed all over the Earth, made to collect information about soil moisture, temperature, and more. Sony says the data could help scientists track how the climate is changing and help farmers adapt to those changes. Mimamori seemed like it was closer to a pitch than a prototype, but it shows that Sony is at least looking into how it can leverage some of its tech to help deal with climate change.

While some of the ideas Sony showed off seem like moonshots, it’s interesting to get a peek into what Sony’s doing in its labs, beyond creating PlayStations and Spider-Verse sequels. Even if we don’t end up getting consumer devices out of it, at least we got some pretty cool futuristic visuals.